Smart Navigation

How can a simple robot arm be upgraded so that it becomes an autonomously acting detective?

Our Smart Navigation Showcase provides the answer to this question!

Goal of the showcase

Do innovations in autonomous navigation and use cases for artificial intelligence only work on the most sophisticated robots and not on older devices? Think again!

Our Smart Navigation showcase demonstrates how even complex use cases, such as recognizing and tracking any object, can be realized on a small, simple robot arm, even if all you start with is a single serial interface!

In addition, it shows how the robot's resulting live machine data can be processed intelligently, enriched, and evaluated statistically.

The following video provides more details:

The Setup

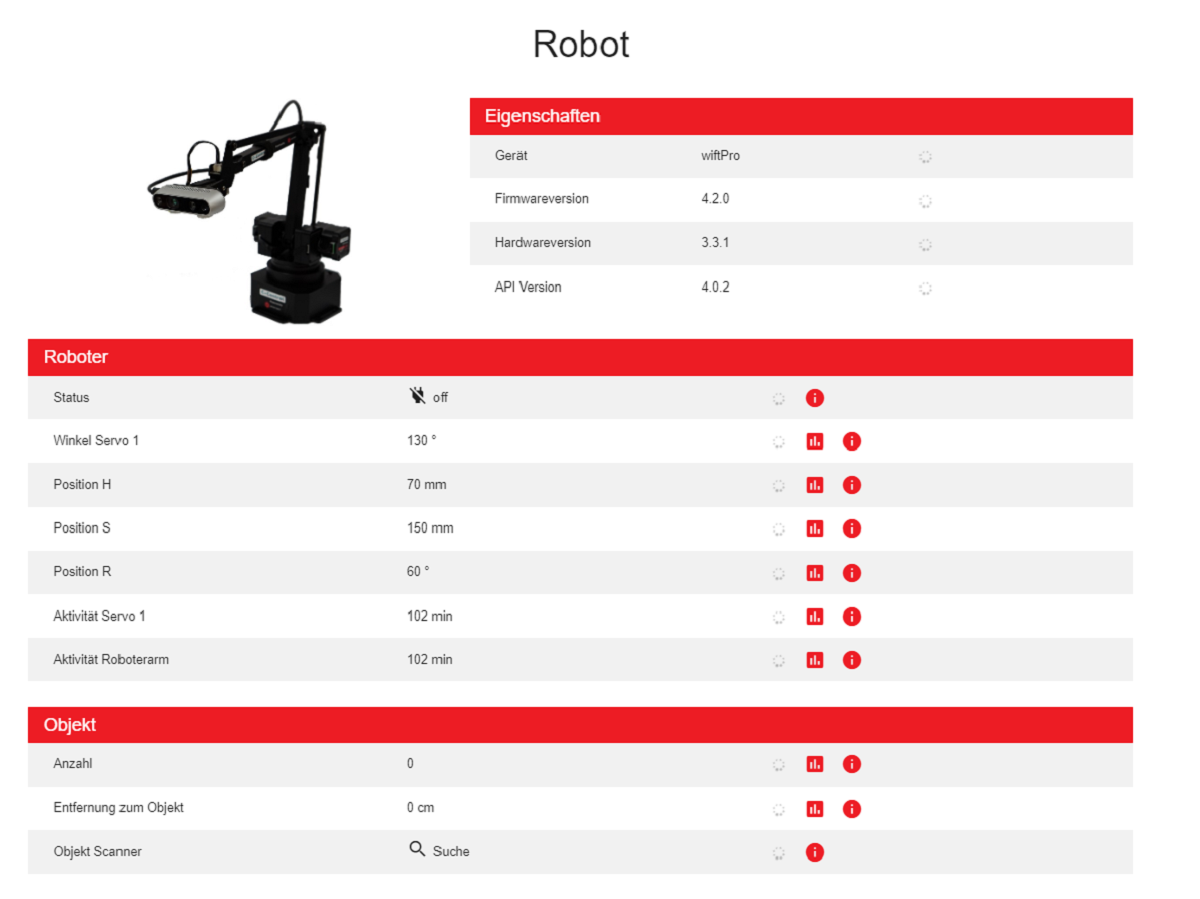

A simple robot arm by UFACTORY (uArm), upgraded with an Intel RealSense stereo depth camera.

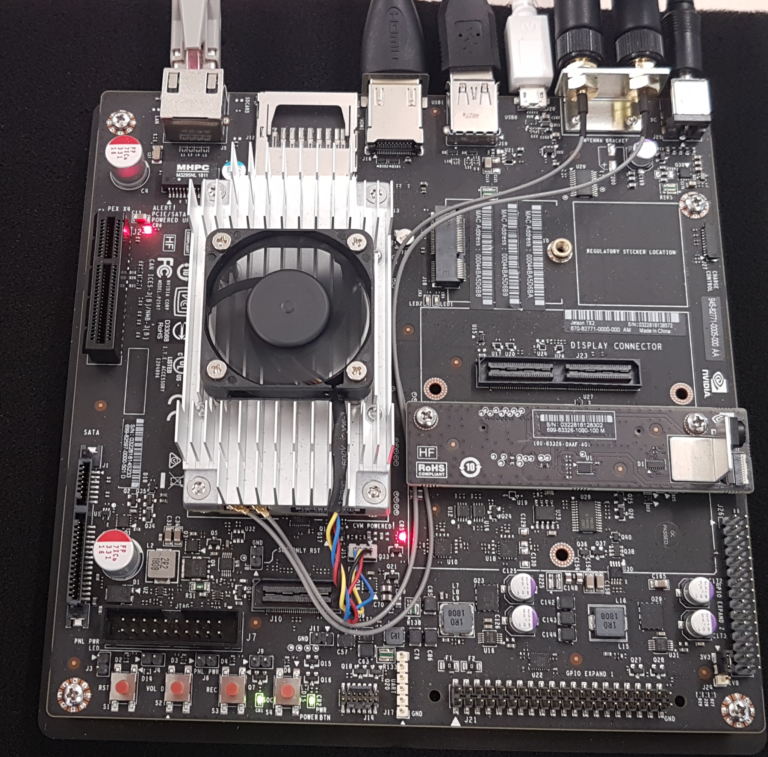

An NVIDIA Jetson TX2: the heart of the setup.

This is where the neural network runs and the robot control is coordinated.

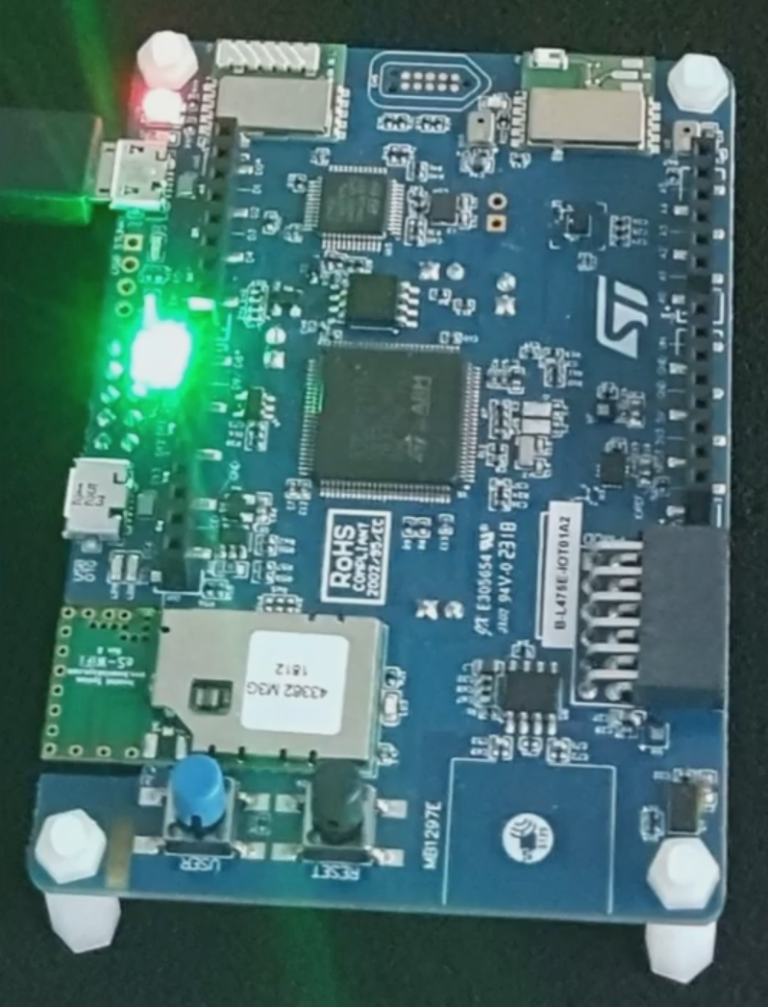

An IoT sensor node that extends the robot's range of sensor values. The robot can now also measure other physical parameters such as temperature, air pressure, and vibration, evaluate them statistically, and react to them.

Technology & Software

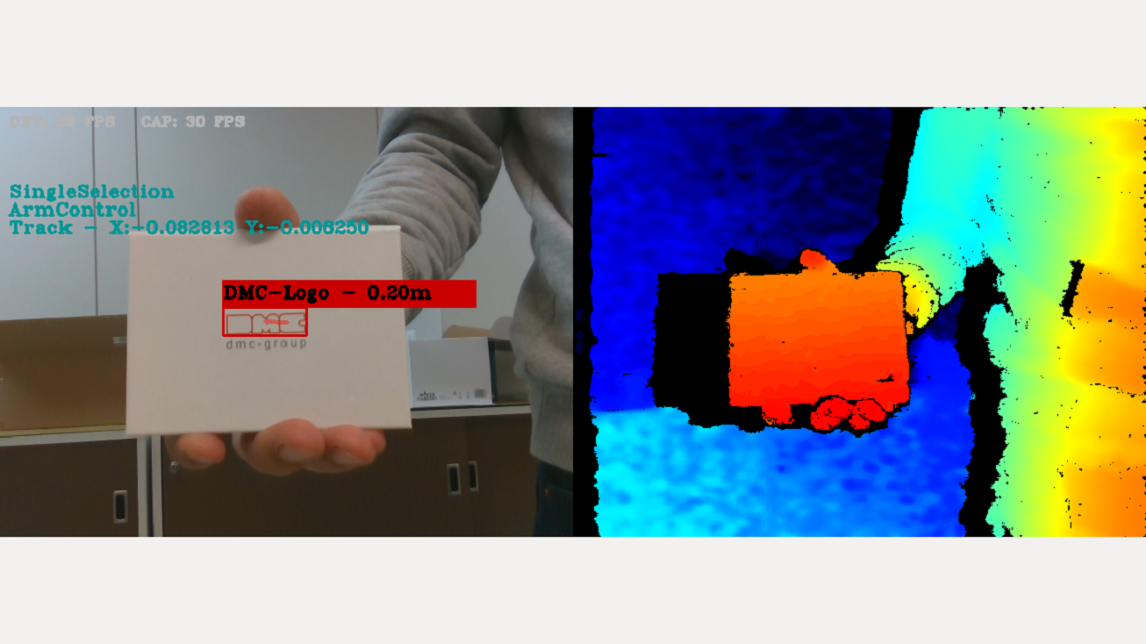

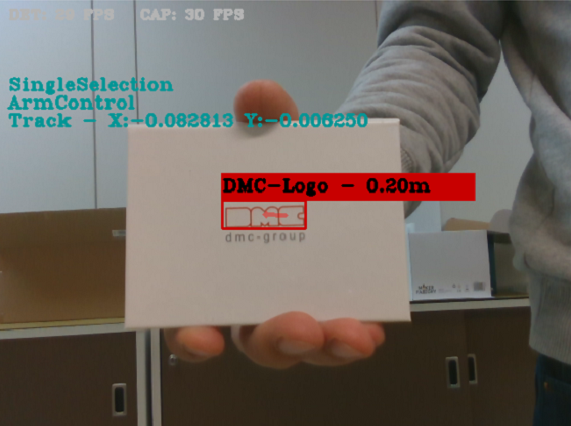

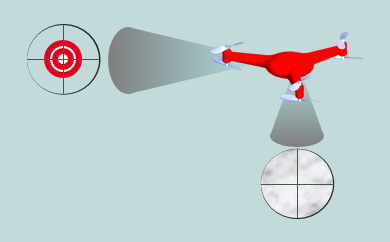

The Intel RealSense stereo camera delivers two different video streams.

The first is an RGB video with a resolution of 640×480 pixels.

The second is an infrared-based depth image. Each pixel carries a distance value, which is visualized with a colour coding: red pixels are close to the camera, blue pixels are farther away.
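
The colour coding can be illustrated with a minimal sketch. It assumes depth values in millimetres, a linear red-to-blue gradient, and a hypothetical working range of 300 to 3000 mm; pixels with depth 0 are treated as "no measurement", which is how RealSense depth frames mark invalid pixels. The exact colour map used in the showcase is not specified, so this is an illustration of the principle only.

```python
def depth_to_rgb(depth_mm, near_mm=300, far_mm=3000):
    """Map one depth value to an (R, G, B) colour: red = near, blue = far.

    near_mm and far_mm are assumed example limits, not values from the showcase.
    """
    if depth_mm <= 0:
        # 0 means "no depth measured" for this pixel; draw it black.
        return (0, 0, 0)
    # Clamp into the working range, then normalise to 0.0 (near) .. 1.0 (far).
    clamped = min(max(depth_mm, near_mm), far_mm)
    t = (clamped - near_mm) / (far_mm - near_mm)
    return (round(255 * (1 - t)), 0, round(255 * t))


def colourize(depth_frame):
    """Apply depth_to_rgb to every pixel of a 2-D depth frame (list of rows)."""
    return [[depth_to_rgb(d) for d in row] for row in depth_frame]


if __name__ == "__main__":
    frame = [[0, 300], [1650, 3000]]
    print(colourize(frame))
```

Clamping keeps objects outside the assumed range at the extreme colours instead of producing invalid RGB values.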

The Smart Navigation showcase is built on object recognition with tinyYOLO and programmed real-time control of the robot arm. To demonstrate the (almost) unlimited possibilities of object recognition, we trained the network to recognize our dmc-group logo.

With a training data set of nearly 300 images and several hours of computing time on our high-performance computers in Paderborn, the neural network was trained well enough to reliably recognize our logo. Although the training itself required a lot of resources, the trained network can run even on smaller embedded devices; no high-end server is required.

The robot arm is controlled by recognizing the object frame by frame (the camera delivers a stable 30 fps). From the difference between the trained object's positions in consecutive frames, a motion vector (red arrow) is calculated and translated into commands for the machine control. This lets the robot arm follow the trained object in real time (within its degrees of freedom).
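
The tracking step can be sketched as follows. This is a minimal illustration, not the showcase's control code: it assumes the detector returns one bounding box per frame in pixel coordinates, and the gain, the dead band, and the mapping of horizontal motion to the azimuth (base rotation) and vertical motion to elevation are hypothetical choices.

```python
from dataclasses import dataclass

FPS = 30  # frame rate stated for the camera


@dataclass
class Box:
    """Detected bounding box in pixels; (x, y) is the top-left corner."""
    x: float
    y: float
    w: float
    h: float

    def centre(self) -> tuple[float, float]:
        return (self.x + self.w / 2, self.y + self.h / 2)


def motion_vector(prev: Box, curr: Box) -> tuple[float, float]:
    """Displacement (dx, dy) of the object's centre between two consecutive frames."""
    (px, py), (cx, cy) = prev.centre(), curr.centre()
    return (cx - px, cy - py)


def pixel_velocity(vector: tuple[float, float]) -> tuple[float, float]:
    """Convert a per-frame displacement into pixels per second."""
    dx, dy = vector
    return (dx * FPS, dy * FPS)


def to_arm_command(vector, gain=0.05, deadband_px=2.0):
    """Hypothetical mapping of pixel motion to joint steps in degrees.

    Horizontal motion drives the azimuth (base rotation), vertical motion
    the elevation. Movements inside the dead band are ignored to suppress jitter.
    """
    dx, dy = vector
    d_azimuth = gain * dx if abs(dx) > deadband_px else 0.0
    # Image y grows downwards, so invert it for an "up is positive" elevation.
    d_elevation = -gain * dy if abs(dy) > deadband_px else 0.0
    return (d_azimuth, d_elevation)


if __name__ == "__main__":
    previous, current = Box(100, 100, 40, 40), Box(110, 95, 40, 40)
    vec = motion_vector(previous, current)
    print(vec, pixel_velocity(vec), to_arm_command(vec))
```

The dead band keeps the arm from twitching on small detection jitter; the pixel velocity could instead feed a speed-based controller if fixed angular steps prove too coarse.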

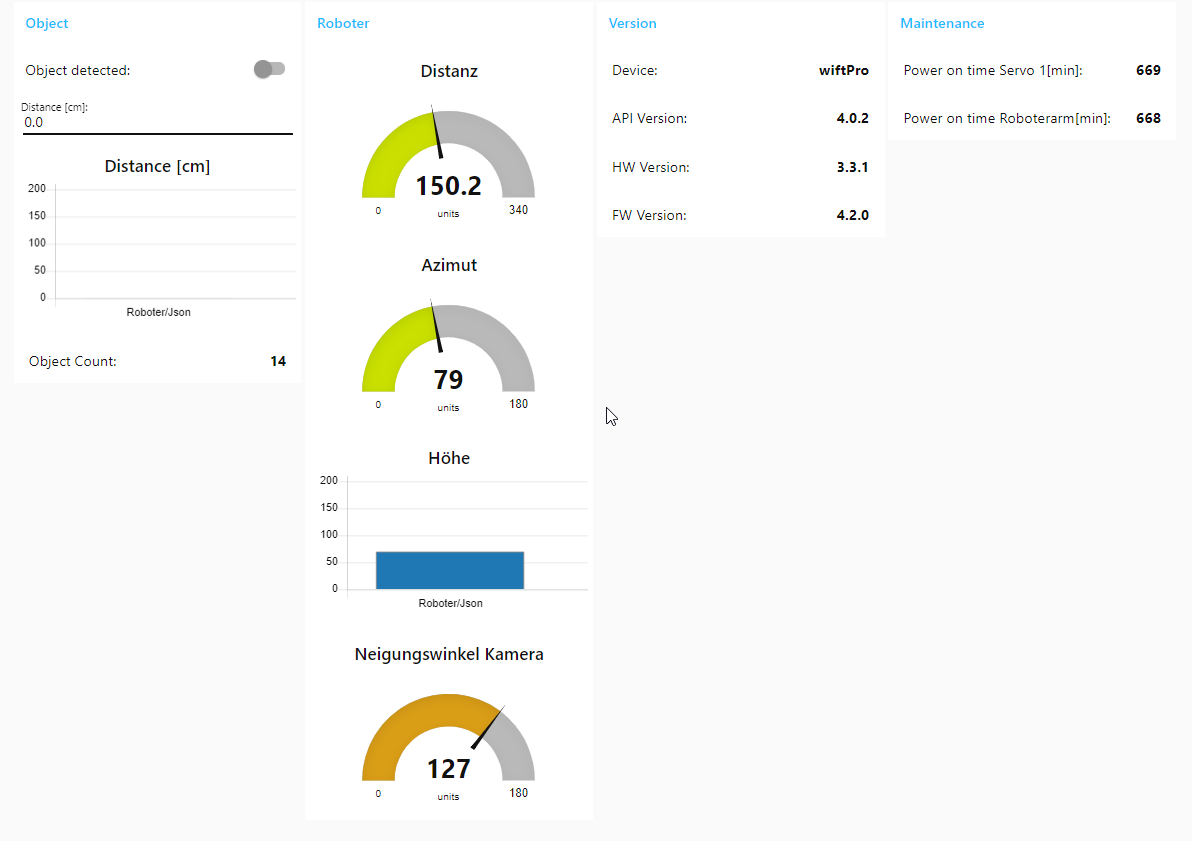

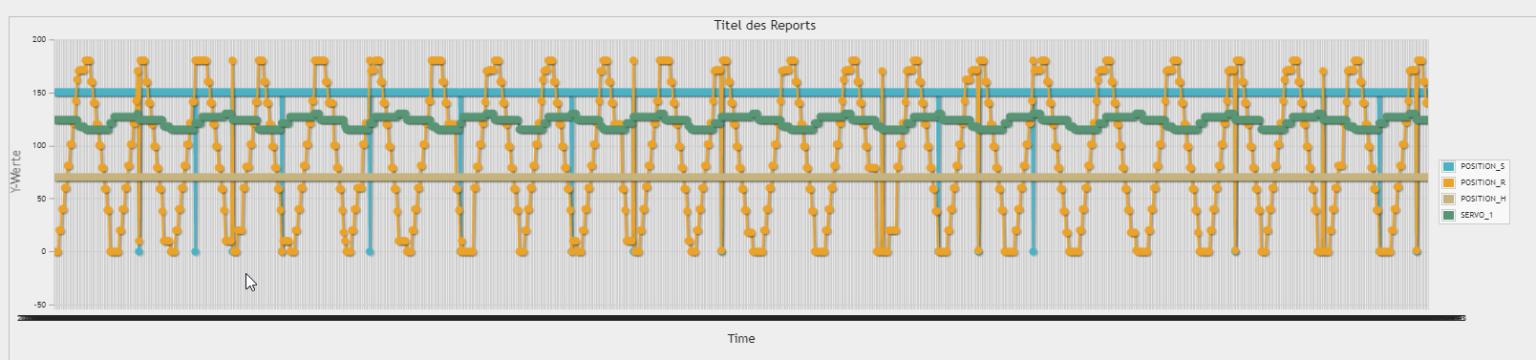

The Node-RED based dashboard displays the robot arm's live data. Real-time machine values such as the number of detected objects, the azimuth angle of the arm, or the distance to the object can be monitored here.
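
How such dashboard values could be produced is sketched below under explicit assumptions: the distance to the object is taken as the median depth inside its bounding box (robust against background pixels at the box edges), pixels with depth 0 are skipped as invalid, and each update is serialized as JSON, which Node-RED flows can parse directly. The field names and the transport to Node-RED (for example MQTT or HTTP) are hypothetical; the source does not describe them.

```python
import json
import statistics


def object_distance_mm(depth_frame, box):
    """Median depth (mm) inside a bounding box (x, y, w, h) given in pixels.

    Pixels with depth 0 carry no measurement and are skipped;
    returns None if the box contains no valid depth at all.
    """
    x, y, w, h = box
    values = [
        depth_frame[row][col]
        for row in range(y, y + h)
        for col in range(x, x + w)
        if depth_frame[row][col] > 0
    ]
    return statistics.median(values) if values else None


def telemetry_message(detections, azimuth_deg, distance_mm):
    """Serialize one dashboard update as JSON (field names are hypothetical)."""
    return json.dumps({
        "detected_objects": len(detections),
        "azimuth_deg": azimuth_deg,
        "distance_mm": distance_mm,
    })


if __name__ == "__main__":
    # Toy 4x4 depth frame with one object in the middle.
    depth = [[0, 0, 0, 0], [0, 500, 510, 0], [0, 0, 520, 0], [0, 0, 0, 0]]
    box = (1, 1, 2, 2)
    print(telemetry_message([box], 12.5, object_distance_mm(depth, box)))
```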

Since the dashboard's layout is freely configurable, unwanted values can be hidden and additional values can be displayed on request (such as the readings of the IoT sensor node).

Analytics & Data

Once sufficient data has been collected, it can be visualized and evaluated over time.

This helps to detect correlations between different data streams during error analysis.
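
One simple way to quantify such a correlation is the Pearson correlation coefficient. The sketch below assumes the two streams have already been resampled to common timestamps so that their samples pair up; on Python 3.10 and later, `statistics.correlation` computes the same coefficient.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two time-aligned sample series.

    Returns a value in [-1, 1]: near +1 means the series rise together,
    near -1 means they move in opposite directions, near 0 means no
    linear relationship.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 samples")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (spread_x * spread_y)
```

A high coefficient only flags a candidate relationship for closer analysis; when the effect lags behind its cause (as with slow overheating), shifting one series in time before comparing can reveal the link.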

Example: a malfunction in the robot arm's servo motor after someone has hit the robot (= the IoT node's vibration sensor was triggered), or a deterioration of the robot's performance after it has overheated (= the temperature sensor had already registered an elevated robot temperature for a longer period of time).

Of course, such information can be used not only for error analysis but also for error prevention. Simple pre-programmed reactions are one option, e.g. reducing the speed of movement as long as the temperature sensor indicates an elevated temperature.
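
Such a pre-programmed reaction might look like the following sketch. The thresholds and speed factors are hypothetical. The sketch adds hysteresis (throttle above one temperature, release only below a lower one) so that the speed does not toggle back and forth when the reading hovers around a single limit.

```python
from dataclasses import dataclass


@dataclass
class SpeedGovernor:
    """Reduce the arm's movement speed while the temperature is elevated.

    All thresholds and speed factors are hypothetical example values.
    """
    high_c: float = 50.0        # throttle at or above this temperature
    low_c: float = 45.0         # return to full speed at or below this one
    normal_speed: float = 1.0   # speed factor applied to motion commands
    reduced_speed: float = 0.5
    throttled: bool = False

    def update(self, temperature_c: float) -> float:
        """Feed one temperature reading and get the speed factor to use."""
        if temperature_c >= self.high_c:
            self.throttled = True
        elif temperature_c <= self.low_c:
            self.throttled = False
        # Between low_c and high_c the previous state is kept (hysteresis).
        return self.reduced_speed if self.throttled else self.normal_speed


if __name__ == "__main__":
    governor = SpeedGovernor()
    for reading in [40.0, 51.0, 48.0, 44.0]:
        print(reading, governor.update(reading))
```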

Somewhat more complex, but all the more promising, is an approach that predicts error conditions (keyword: predictive maintenance). In this approach, the machine data itself would be monitored by a trained neural network. The basic principle is to recognize specific machine error patterns and to send early warnings of an imminent malfunction or breakdown, so that there is time to react.
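
Training such a network is beyond a short example, but the principle of raising an early warning when the data leaves its usual pattern can be illustrated with a much simpler statistical technique, a rolling z-score. To be clear, this is a substitute for illustration only, not a neural network; the window size, threshold, and minimum history are assumed values.

```python
import math
from collections import deque


class RollingZScoreDetector:
    """Warn when a reading deviates strongly from its recent history.

    Simple statistical stand-in for illustration only; not a neural network.
    window, threshold and min_samples are assumed example values.
    """

    def __init__(self, window=50, threshold=3.0, min_samples=10):
        self.history = deque(maxlen=window)  # last `window` readings
        self.threshold = threshold           # warn above this many standard deviations
        self.min_samples = min_samples       # stay silent until enough history exists

    def update(self, value):
        """Add one reading; return True if it should trigger an early warning."""
        warn = False
        if len(self.history) >= self.min_samples:
            mean = sum(self.history) / len(self.history)
            variance = sum((v - mean) ** 2 for v in self.history) / len(self.history)
            std = math.sqrt(variance)
            warn = std > 0 and abs(value - mean) / std > self.threshold
        self.history.append(value)
        return warn


if __name__ == "__main__":
    detector = RollingZScoreDetector()
    # Toy readings: steady operation, then a sudden spike.
    readings = [20.0, 20.2] * 10 + [30.0]
    for i, r in enumerate(readings):
        if detector.update(r):
            print(f"early warning at sample {i}: {r}")
```

A trained model can learn multi-sensor patterns that a single-signal threshold like this cannot capture, which is why the text calls that approach more promising.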

Additional use-cases

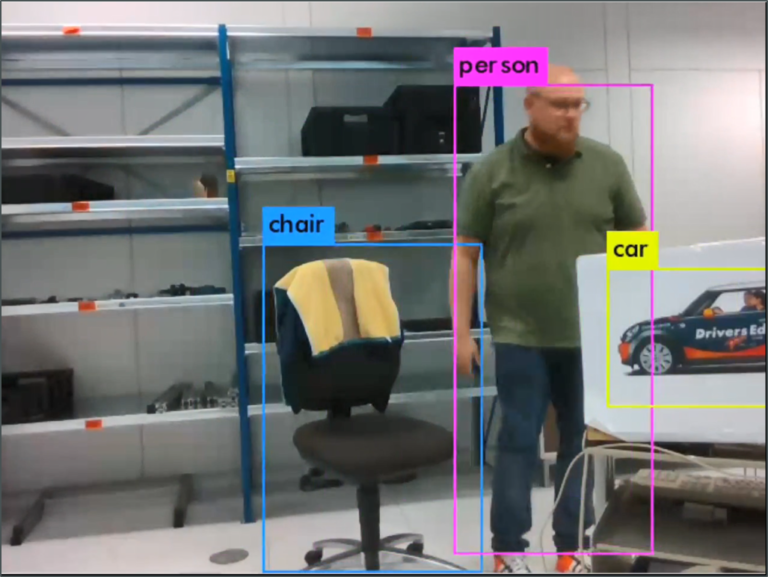

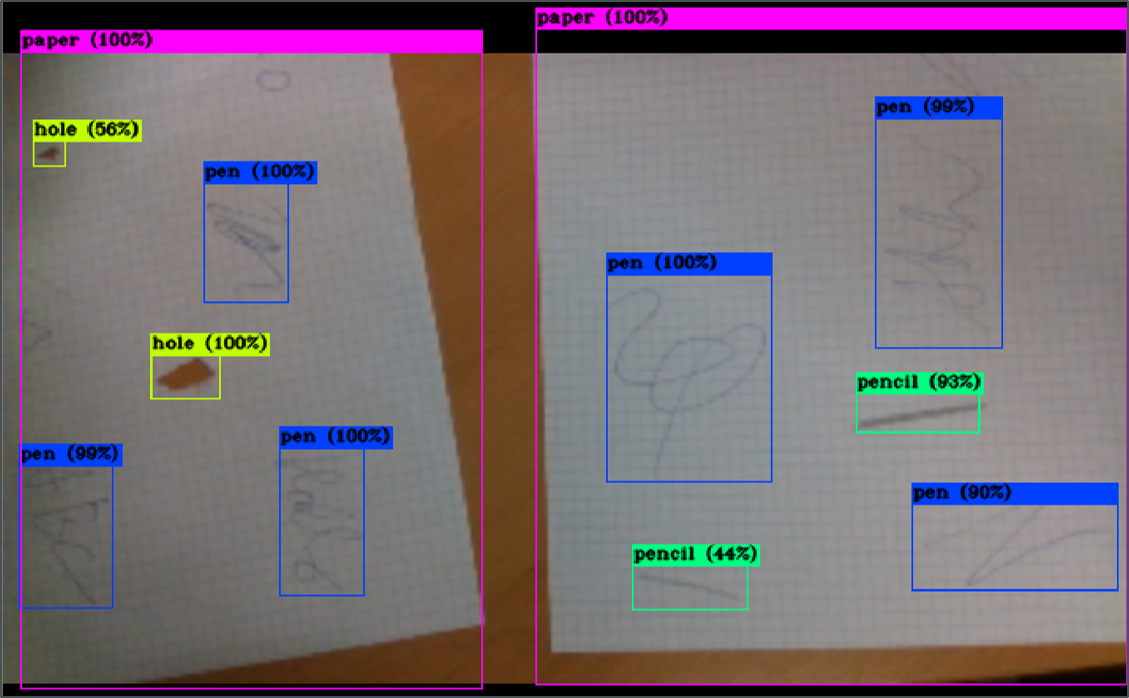

As mentioned at the beginning, this technology is very versatile. The trained objects do not have to be logos.

The images above show some examples of recognizing people and other objects such as chairs or vehicles.

To recognize a new, previously unknown object, the neural network has to be trained again, as we did to recognize our logo. Such re-training first requires a sufficiently large, high-quality training data set of images.

However, once the training has been completed successfully, almost any object can be recognized reliably, ranging from face recognition to the identification of (partial) products in a factory during the production process.

In quality assurance, the ability to detect production defects in a product is certainly also of interest.

Another possible scenario is aerial reconnaissance of a chaotic or dangerous situation using thermal images. For example, if people are missing in a forest fire, rescue teams have to assess whether or not to enter an area despite the developing smoke.

Another example is a system that actively warns people of danger, e.g. when they enter a specific danger zone. The loading and unloading zones of autonomous storage robots in automated logistics areas are a perfect example.
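
For a fixed camera, the core check of such a warning system can be sketched as a rectangle overlap test between detected people and a danger zone drawn in image coordinates. This assumes axis-aligned boxes and a detector that labels people as "person" (as models trained on the COCO classes do); the zone coordinates are hypothetical, and a real deployment would more likely use polygon zones and positions in world coordinates.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    """Axis-aligned rectangle in image pixels: (x1, y1) top-left, (x2, y2) bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float


def overlaps(a: Rect, b: Rect) -> bool:
    """True if the two rectangles share any area (touching edges do not count)."""
    return a.x1 < b.x2 and b.x1 < a.x2 and a.y1 < b.y2 and b.y1 < a.y2


def people_in_zone(detections, zone: Rect):
    """Return the boxes of all detected people that overlap the danger zone.

    detections: iterable of (label, Rect) pairs from the object detector.
    """
    return [box for label, box in detections if label == "person" and overlaps(box, zone)]


if __name__ == "__main__":
    zone = Rect(0, 0, 100, 100)  # hypothetical loading zone
    detections = [
        ("person", Rect(50, 50, 150, 150)),
        ("person", Rect(200, 200, 250, 250)),
        ("chair", Rect(10, 10, 20, 20)),
    ]
    if people_in_zone(detections, zone):
        print("Warning: person inside danger zone")
```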

Of course, there may be many more opportunities…

Together on to new horizons

Is Smart Navigation exactly what you were looking for?

Maybe you already have the next challenge in mind?

Either way, we will help you find out whether and how you can benefit from this AI application!

Our team is ready to support you: email sales@dmc-smartsystems.dmc-group.com or call +49 89 427 74 177.